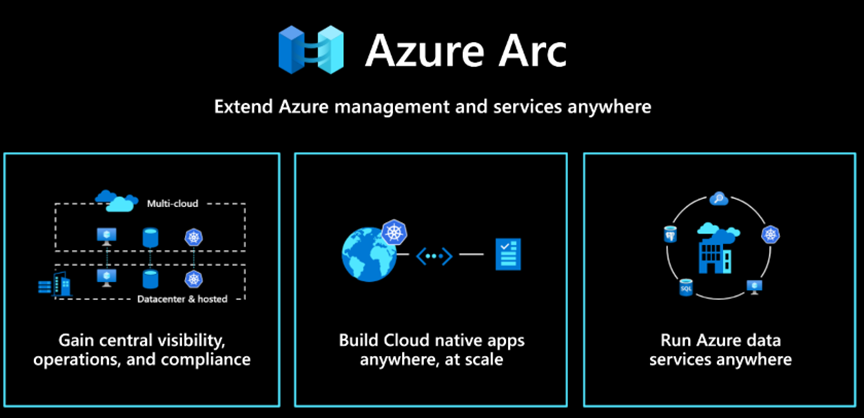

Today, Kubernetes is the industry standard for container orchestration, but many enterprise workloads still run outside Azure: in private datacenters, at edge sites, or across multiple clouds. Managing those clusters at scale is challenging, because governance, compliance, GitOps-based deployments, and monitoring all require a centralized control plane.

This is where Azure Arc-enabled Kubernetes shines. It extends the Azure control plane to any Kubernetes cluster, wherever it runs, without forcing migration to AKS. In this blog, we’ll cover three parts:

- Part 1: Functional overview and real-world use cases

- Part 2: Connectivity model with Azure Arc agents

- Part 3: A hands-on lab connecting an on-premises Kubernetes cluster to Azure Arc through an HTTP proxy (using Tinyproxy on Ubuntu)

Part 1. Azure Arc-enabled Kubernetes: Features and Real-World Use Cases

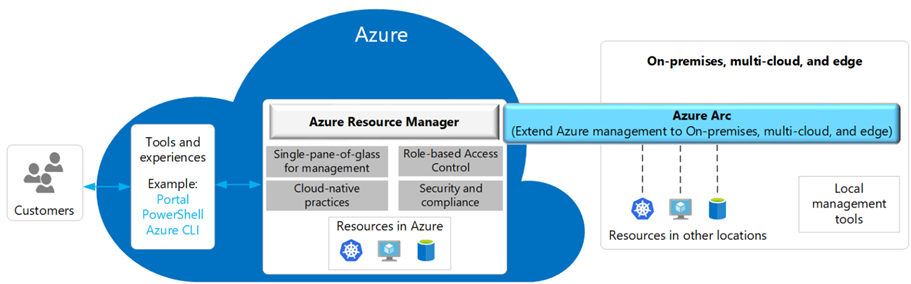

Azure Arc-enabled Kubernetes lets you project external clusters into Azure Resource Manager (ARM) as first-class citizens. Once connected, you gain a unified management experience across hybrid and multi-cloud environments.

Key Capabilities:

- Cluster Onboarding into Azure Resource Manager: your on-prem or third-party cloud cluster (EKS, GKE, Rancher, VMware Tanzu, kubeadm) shows up in Azure as a connectedClusters resource.

- Centralized GitOps-based Configuration: Arc integrates with Flux v2 for declarative GitOps, so you can push policy-driven YAML configurations to dozens or hundreds of clusters.

- Policy and Governance: apply Azure Policy for Kubernetes to enforce security and compliance rules (e.g., block privileged containers).

- Unified Security Posture: Defender for Cloud can scan and protect workloads across all Arc-enabled clusters.

- Monitoring and Insights: use Azure Monitor for Containers to collect logs, metrics, and events, even if your cluster never leaves the datacenter.

- Data Services Anywhere: deploy Arc-enabled Data Services (Azure SQL Managed Instance, PostgreSQL Hyperscale) on-prem while managing them from Azure.
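The sketch below makes the GitOps capability concrete. It wraps the `az k8s-configuration flux create` command from the k8s-configuration Azure CLI extension and assumes you have already run `az extension add --name k8s-configuration`. The configuration name `cluster-config`, its namespace, the default branch, and the `./infrastructure` kustomization path are illustrative assumptions. The cluster and resource group names simply match the lab in Part 3.

```bash
#!/usr/bin/env bash
# Sketch: attach a Flux v2 GitOps configuration to an Arc-enabled cluster.
# Assumes: Azure CLI is logged in and the k8s-configuration extension is installed.
# The configuration name, namespace, and kustomization path are placeholders.

create_flux_config() {
  local rg="$1" cluster="$2" repo_url="$3" branch="${4:-main}"

  # --cluster-type connectedClusters targets an Arc-enabled cluster.
  # --scope cluster lets Flux reconcile resources in any namespace.
  az k8s-configuration flux create \
    --resource-group "$rg" \
    --cluster-name "$cluster" \
    --cluster-type connectedClusters \
    --name cluster-config \
    --namespace cluster-config \
    --scope cluster \
    --url "$repo_url" \
    --branch "$branch" \
    --kustomization name=infra path=./infrastructure prune=true
}

# Example (hypothetical repository URL):
# create_flux_config arc-rg onprem-k8s-lab https://github.com/<org>/<repo> main
```

The same function can be looped over many cluster names, which is how one Git repository ends up driving a whole fleet.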

Typical Enterprise Use Cases:

- Regulated industries where workloads must stay on-prem but still require centralized compliance and audit.

- Edge clusters in retail, telco, or manufacturing, managed at scale.

- Multi-cloud enterprises running EKS/GKE/Tanzu clusters but wanting one governance plane.

- Hybrid dev/test: clusters in Azure and on-prem under a single policy/GitOps umbrella.

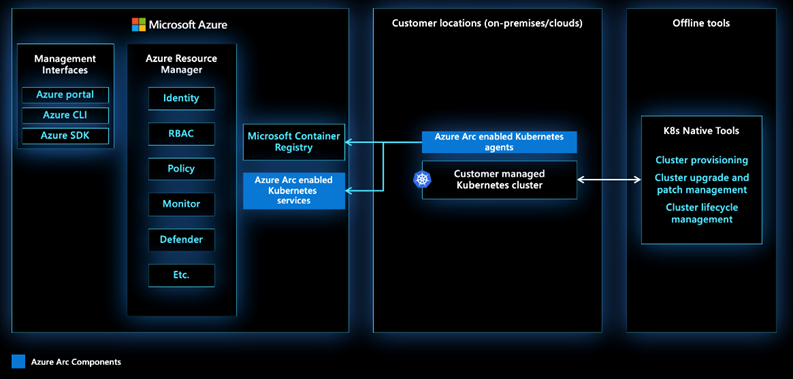

Part 2. Connectivity Model with Azure Arc Agents

When you connect a cluster, Azure Arc installs a set of agents via Helm. These agents:

- Establish outbound HTTPS (443) connections to Azure (no inbound firewall rules needed).

- Run inside a dedicated namespace (azure-arc) with multiple pods (config-agent, cluster-metadata-operator, clusteridentityoperator, flux-logs-agent, etc.).

- Project the cluster as a connectedClusters resource in ARM.

Connectivity Flow:

- az connectedk8s connect deploys the Arc agents on the on-prem cluster.

- The agents authenticate to Azure using a service principal or managed identity.

- The agents periodically report cluster metadata to Azure and pull down configuration changes.

- No customer workload leaves the cluster; only metadata and policy state are exchanged.

This architecture matters most for enterprises whose clusters sit in private networks without direct internet access. In those environments, connectivity is typically funneled through a corporate HTTP proxy or security appliance. That’s exactly what we’ll simulate in our lab.

Part 3. Lab Walkthrough: Connecting On-Prem Kubernetes to Azure Arc via Tinyproxy

In this lab, we’ll simulate a real-world enterprise scenario:

- The Kubernetes cluster is in a private subnet with no direct internet access.

- All outbound traffic must pass through an HTTP proxy (in production, this could be Bluecoat, Zscaler, Palo Alto appliance, etc.).

- In our lab, we’ll use Tinyproxy on Ubuntu to stand in for that proxy.

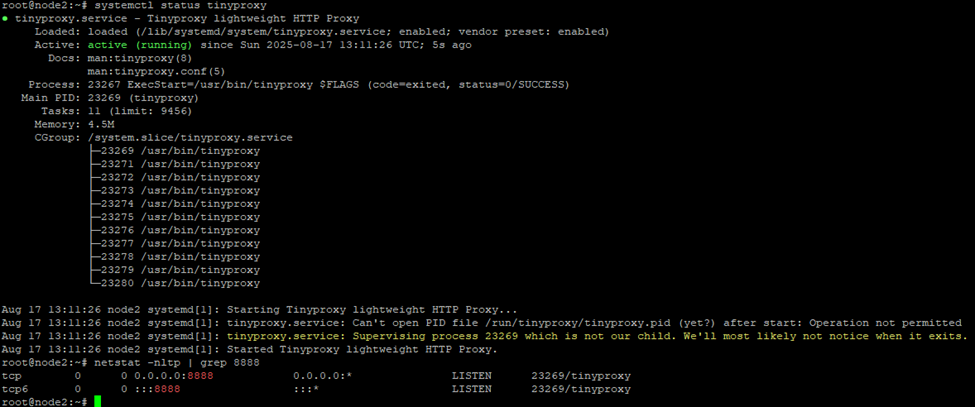

Step 1. Set Up the HTTP Proxy with Tinyproxy on Ubuntu

On a VM in your lab environment (reachable from Kubernetes nodes):

```bash
sudo apt update
sudo apt install tinyproxy -y
```

Edit /etc/tinyproxy/tinyproxy.conf:

```
# ===== Tinyproxy Forward Proxy - Lab Configuration =====
# Listen on all network interfaces (you can restrict to a specific IP if needed)
Listen 0.0.0.0
# Port where Tinyproxy will listen
Port 8888
# Maximum number of clients and processes
MaxClients 200
StartServers 5
MinSpareServers 5
MaxSpareServers 20
# Allow rules: only permit traffic from local networks
# Replace with your actual client subnet (example: 10.1.0.0/24)
Allow 127.0.0.1
Allow 10.1.0.0/24
# CONNECT method: allow tunneling only on safe ports
ConnectPort 443
ConnectPort 80
ConnectPort 1025-65535
# Logging
LogFile "/var/log/tinyproxy/tinyproxy.log"
LogLevel Info
```

Restart the service and confirm it is running:

```bash
sudo systemctl restart tinyproxy
sudo systemctl status tinyproxy --no-pager
```
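Tinyproxy only serves clients that match one of its Allow lines, so a node outside 10.1.0.0/24 will be refused. The hypothetical `ip_in_cidr` helper below is a minimal sketch of the same IPv4 prefix check. You can use it to confirm that a node's address falls inside the subnet before debugging anything else. It handles dotted-quad IPv4 addresses only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: is an IPv4 address inside a CIDR block?
# Mirrors the idea behind Tinyproxy's Allow lines (IPv4 only).

ip_to_int() {
  # Convert dotted-quad a.b.c.d into a 32-bit integer.
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Build the network mask, e.g. /24 -> 0xFFFFFF00.
  local mask=$(( bits == 0 ? 0 : ((0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF) ))
  # Same network if both addresses agree on the masked prefix bits.
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Usage (replace the subnet with your own Allow line):
# ip_in_cidr 10.1.0.15 10.1.0.0/24 && echo allowed || echo "not allowed"
```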

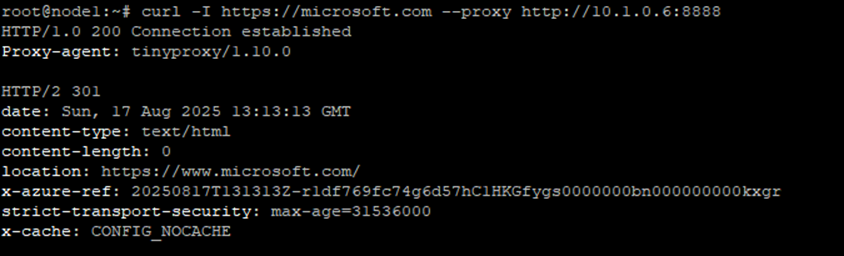

Verify that the HTTP proxy works from a Kubernetes node:

```bash
curl -I https://microsoft.com --proxy http://<proxy-ip>:8888
```
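A single request to microsoft.com proves the proxy works, but not that every endpoint Arc needs is reachable. The sketch below probes a small, partial sample of the Azure endpoints Microsoft documents for Arc-enabled Kubernetes. The list is an assumption for illustration; consult the official network requirements page for the complete, region-specific set.

```bash
#!/usr/bin/env bash
# Sketch: probe a few Azure Arc endpoints through the lab proxy.
# Partial sample only; see Microsoft's Arc network requirements for the full list.

ARC_ENDPOINTS="management.azure.com login.microsoftonline.com mcr.microsoft.com gbl.his.arc.azure.com"

check_arc_endpoints() {
  local proxy="$1" failed=0 host
  for host in $ARC_ENDPOINTS; do
    # curl exits 0 on any HTTP response (even 4xx), which is enough to
    # show that the CONNECT tunnel through the proxy works.
    if curl -sS -o /dev/null --max-time 10 --proxy "$proxy" "https://$host/"; then
      echo "OK    $host"
    else
      echo "FAIL  $host"
      failed=1
    fi
  done
  return "$failed"
}

# Usage on a Kubernetes node:
# check_arc_endpoints http://<proxy-ip>:8888
```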

Step 2. Configure Proxy on the Deployment Machine

On the admin node where you run kubectl:

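```bash
export http_proxy=http://<proxy-ip>:8888
export https_proxy=http://<proxy-ip>:8888
# Keep kubectl traffic to the on-prem API server off the proxy (adjust to your environment)
export no_proxy=localhost,127.0.0.1,<api-server-ip>
```

Step 3. Connect the Cluster with the connectedk8s Command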

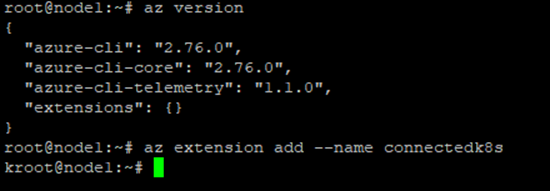

3.0 — Prepare the Deployment Machine

First, make sure the Azure CLI is installed and up to date, then add the Arc extension:

```bash
az version
az extension add --name connectedk8s
```

This extension contains the az connectedk8s commands used to bootstrap the Arc agents into your cluster.

3.1 — Authenticate with Azure

Login from the deployment machine (where kubectl is configured to talk to your on-prem cluster):

```bash
az login
az account set --subscription "<your-subscription-id>"
```

This ensures that all resources you create map to the correct subscription and tenant.

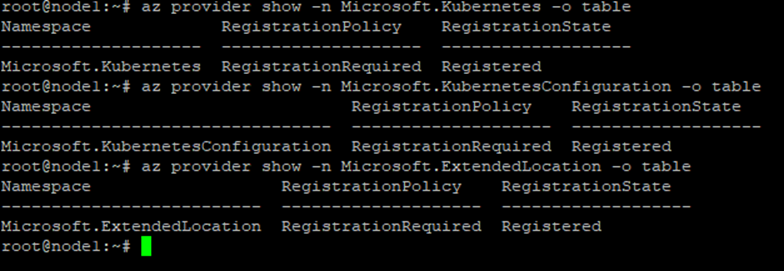

3.2 — Register the Azure Arc Resource Providers

Arc-enabled Kubernetes relies on several Azure resource providers. Register them once per subscription:

```bash
az provider register --namespace Microsoft.Kubernetes
az provider register --namespace Microsoft.KubernetesConfiguration
az provider register --namespace Microsoft.ExtendedLocation
```

Verify registration status:

```bash
az provider show -n Microsoft.Kubernetes -o table
```

When registration completes, the RegistrationState column displays Registered.
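The register calls above return immediately while registration continues in the background. As a sketch, the loop below registers each provider and then polls `az provider show --query registrationState` until it reads Registered. The MAX_ATTEMPTS and SLEEP_SECONDS defaults are arbitrary values chosen for this example. If you don't need progress output, `az provider register --wait` is a simpler alternative.

```bash
#!/usr/bin/env bash
# Sketch: register the Arc providers and wait until each reports "Registered".
# MAX_ATTEMPTS and SLEEP_SECONDS are arbitrary example defaults.

ARC_PROVIDERS="Microsoft.Kubernetes Microsoft.KubernetesConfiguration Microsoft.ExtendedLocation"
MAX_ATTEMPTS=${MAX_ATTEMPTS:-30}
SLEEP_SECONDS=${SLEEP_SECONDS:-10}

register_arc_providers() {
  local ns state attempt
  for ns in $ARC_PROVIDERS; do
    az provider register --namespace "$ns" >/dev/null || return 1
    attempt=1
    while :; do
      state=$(az provider show --namespace "$ns" --query registrationState --output tsv)
      echo "$ns: $state"
      [ "$state" = "Registered" ] && break
      if [ "$attempt" -ge "$MAX_ATTEMPTS" ]; then
        echo "Timed out waiting for $ns" >&2
        return 1
      fi
      attempt=$((attempt + 1))
      sleep "$SLEEP_SECONDS"
    done
  done
}

# Usage:
# register_arc_providers
```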

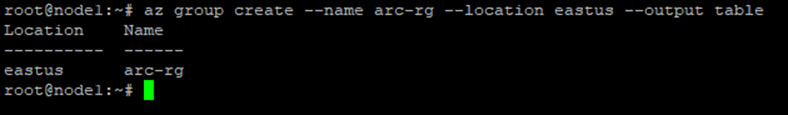

3.3 — Create a Resource Group

Resource groups act as logical containers for your Arc resources:

```bash
az group create --name arc-rg --location eastus --output table
```

Feel free to select a different region based on your governance requirements.

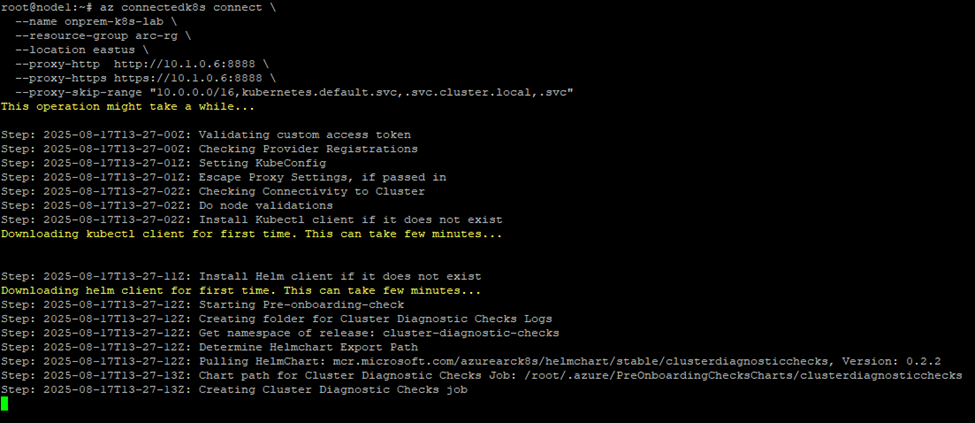

3.4 — Connect the Cluster via Outbound Proxy

Now comes the key step: configuring Arc agents to talk to Azure through the proxy.

Export proxy variables on the deployment machine:

```bash
export HTTP_PROXY=http://<proxy-ip>:8888
export HTTPS_PROXY=http://<proxy-ip>:8888
```

Run the connection command:

```bash
az connectedk8s connect \
  --name onprem-k8s-lab \
  --resource-group arc-rg \
  --location eastus \
  --proxy-http http://<proxy-ip>:8888 \
  --proxy-https http://<proxy-ip>:8888 \
  --proxy-skip-range "10.0.0.0/16,kubernetes.default.svc,.svc.cluster.local,.svc"
```

Notes:

- Set both --proxy-http and --proxy-https. Tinyproxy is a plain HTTP proxy, so both URLs use the http:// scheme and port 8888, even though the traffic it tunnels is HTTPS.

- --proxy-skip-range ensures intra-cluster traffic never goes through the proxy. Replace 10.0.0.0/16 with your cluster's own service and pod CIDRs.
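--proxy-skip-range takes a single comma-separated string, and it is easy to mistype. The hypothetical `build_skip_range` helper below joins whatever CIDRs you pass it with the in-cluster DNS suffixes from the command above and drops duplicates. The CIDRs in the usage example are placeholders; read the real values from your cluster configuration. You can reuse the same string for NO_PROXY on the deployment machine, but note that not every tool honors CIDR entries in NO_PROXY.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a comma-separated --proxy-skip-range value
# from cluster CIDRs plus the in-cluster DNS suffixes used in this lab.

build_skip_range() {
  local entries="$*" result="" item
  for item in $entries kubernetes.default.svc .svc.cluster.local .svc; do
    # Skip entries that are already in the list.
    case ",$result," in
      *",$item,"*) continue ;;
    esac
    result="${result:+$result,}$item"
  done
  printf '%s\n' "$result"
}

# Usage (placeholder service and pod CIDRs):
# SKIP_RANGE=$(build_skip_range 10.96.0.0/12 10.244.0.0/16)
# az connectedk8s connect ... --proxy-skip-range "$SKIP_RANGE"
```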

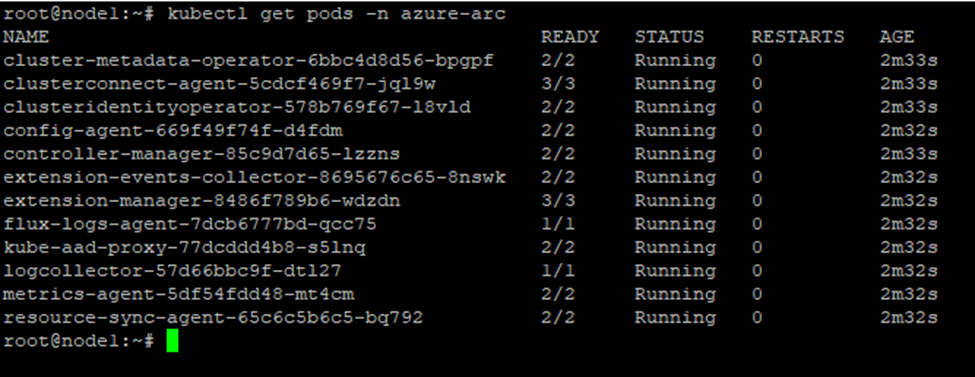

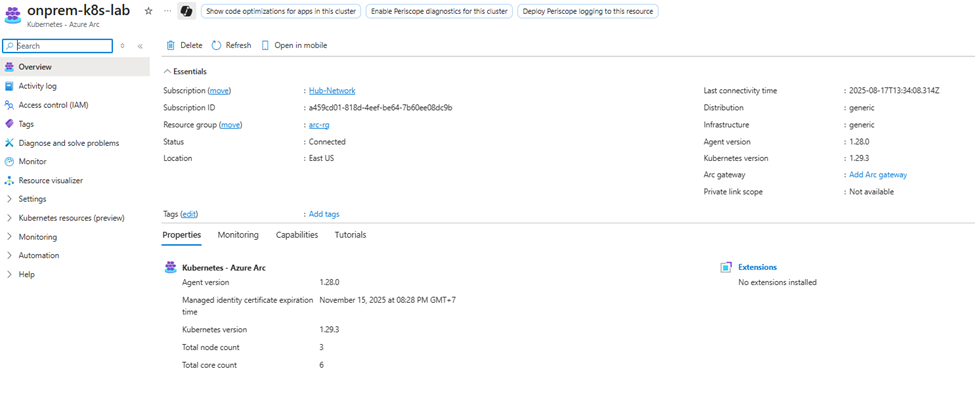

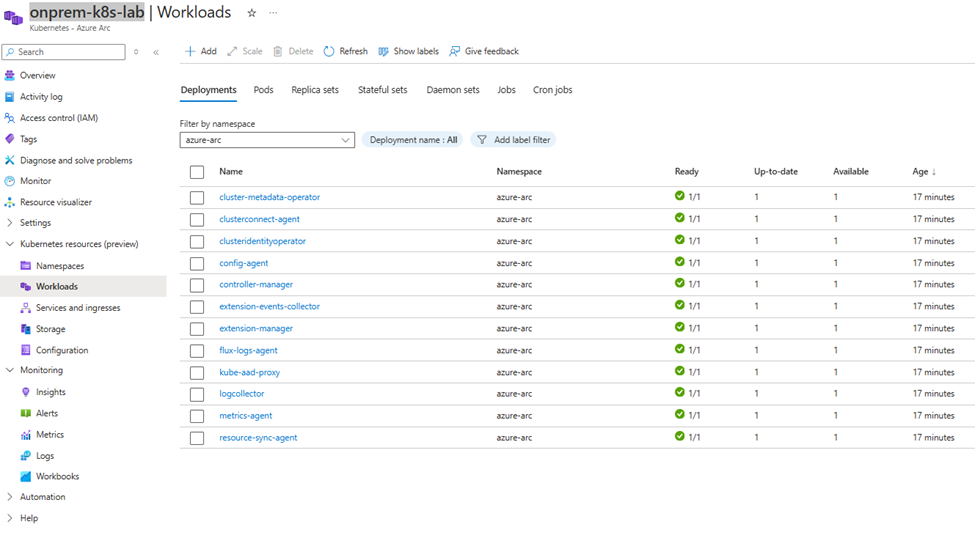

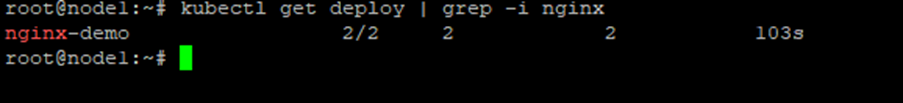

Step 4 — Verify the Connection

Check that the Arc agents are running:

```bash
kubectl get pods -n azure-arc
```
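In scripted runs, a pass/fail result is easier to act on than reading the pod list. The hypothetical `check_pods_running` helper below is a sketch that reads `kubectl get pods --no-headers` output on stdin. It assumes the default column layout (NAME, READY, STATUS, ...) and fails if any pod is not Running or has fewer ready containers than it declares.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pass/fail check over `kubectl get pods --no-headers` output.
# Column 2 is READY (e.g. 2/2) and column 3 is STATUS in the default layout.

check_pods_running() {
  awk '
    {
      total++
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) {
        bad++
        print "NOT READY: " $1 " (" $2 " " $3 ")"
      }
    }
    END {
      if (total == 0) { print "no pods found"; exit 1 }
      exit (bad > 0 ? 1 : 0)
    }'
}

# Usage:
# kubectl get pods -n azure-arc --no-headers | check_pods_running && echo "all Arc agents ready"
```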

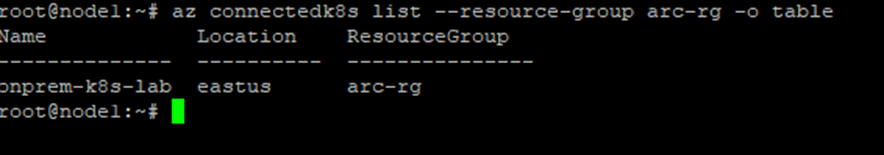

All pods should be in Running state. Then confirm the cluster resource exists in Azure:

```bash
az connectedk8s list --resource-group arc-rg -o table
```

Within a few minutes, you’ll see the connectedClusters resource in the Azure Portal, including cluster metadata such as Kubernetes version, node count, and Arc agent version.
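If you'd rather wait from the shell than refresh the portal, the sketch below polls `az connectedk8s show` and reads the resource's connectivityStatus property until it reports Connected. The attempt count and polling interval defaults are arbitrary values chosen for illustration.

```bash
#!/usr/bin/env bash
# Sketch: poll the connectedClusters resource until Azure reports "Connected".
# Default attempts (20) and interval (15s) are arbitrary example values.

wait_for_arc_connected() {
  local name="$1" rg="$2" attempts="${3:-20}" interval="${4:-15}" status i=1
  while [ "$i" -le "$attempts" ]; do
    status=$(az connectedk8s show --name "$name" --resource-group "$rg" \
      --query connectivityStatus --output tsv)
    echo "attempt $i: connectivityStatus=$status"
    [ "$status" = "Connected" ] && return 0
    i=$((i + 1))
    sleep "$interval"
  done
  return 1
}

# Usage:
# wait_for_arc_connected onprem-k8s-lab arc-rg
```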

🔥 At this point, your on-prem Kubernetes cluster—sitting behind a proxy—is now visible and manageable inside Azure Arc. You’ve just simulated exactly what many enterprises do in production, but with a lightweight proxy setup in your lab.

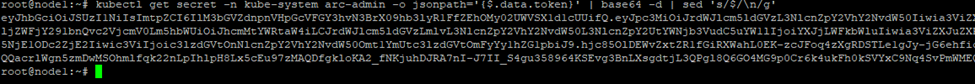

Step 5. Configure Service Account Token Authentication

To allow the Azure Portal to display Kubernetes resources via Cluster Connect, create a service account:

```bash
kubectl create serviceaccount arc-admin -n kube-system
kubectl create clusterrolebinding arc-admin-binding \
  --clusterrole=cluster-admin \
  --serviceaccount=kube-system:arc-admin
```

Create a service account token:

```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: arc-admin
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: arc-admin
type: kubernetes.io/service-account-token
EOF
```

Export the token:

```bash
kubectl get secret -n kube-system arc-admin -o jsonpath='{$.data.token}' | base64 -d | sed 's/$/\n/g'
```
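Before pasting the token into the portal, you can confirm that it belongs to the intended service account. Service account tokens are JWTs. The hypothetical `jwt_subject` helper below decodes the payload (the second dot-separated, base64url-encoded segment) and prints its sub claim, which should be system:serviceaccount:kube-system:arc-admin in this lab. It is a sketch that only decodes the token and does not verify the signature.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "sub" claim of a JWT without verifying it.

jwt_subject() {
  local payload
  # Take the middle segment and convert base64url to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the padding that base64url strips.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d 2>/dev/null \
    | sed -n 's/.*"sub":"\([^"]*\)".*/\1/p'
}

# Usage:
# TOKEN=$(kubectl get secret -n kube-system arc-admin -o jsonpath='{$.data.token}' | base64 -d)
# jwt_subject "$TOKEN"
```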

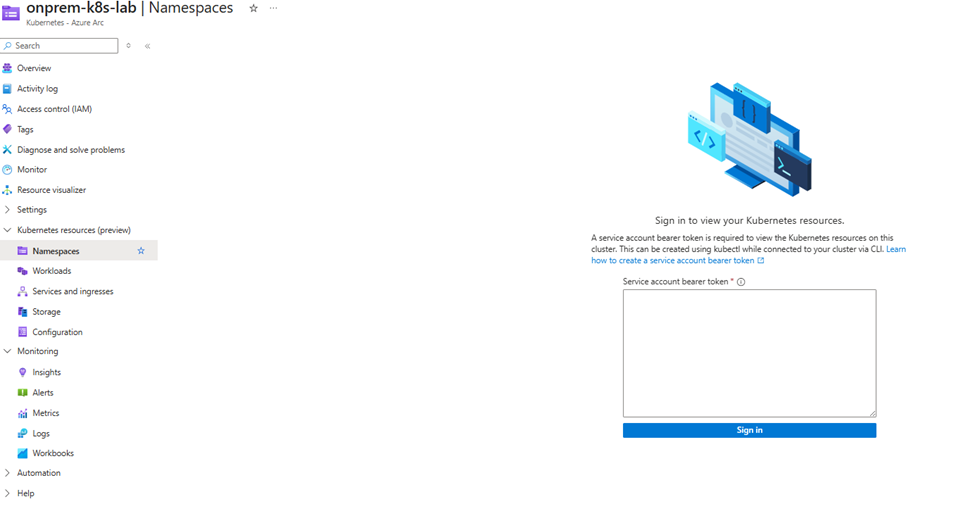

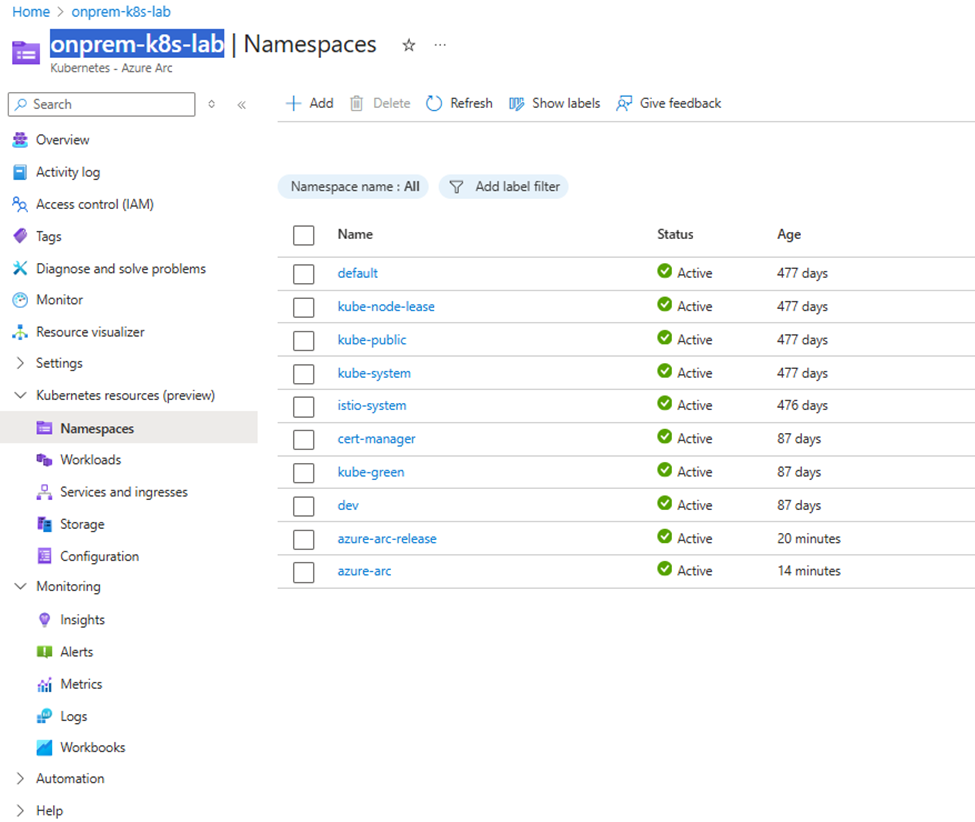

Provide this token when configuring Cluster Connect in the Azure Portal. Once configured, you can use the Azure Portal to browse your on-premises cluster just like a native AKS cluster. Navigate to your onprem-k8s-lab Arc-enabled Kubernetes resource and test the connectivity by exploring different views:

- Namespaces – verify that your existing namespaces are visible.

- Workloads – check Deployments, DaemonSets, and StatefulSets running inside the cluster.

- Services – confirm that ClusterIP, NodePort, or LoadBalancer services are listed.

This ensures that the service account token authentication works correctly and that the Arc agents can project your cluster’s resources into Azure for centralized visibility and management.

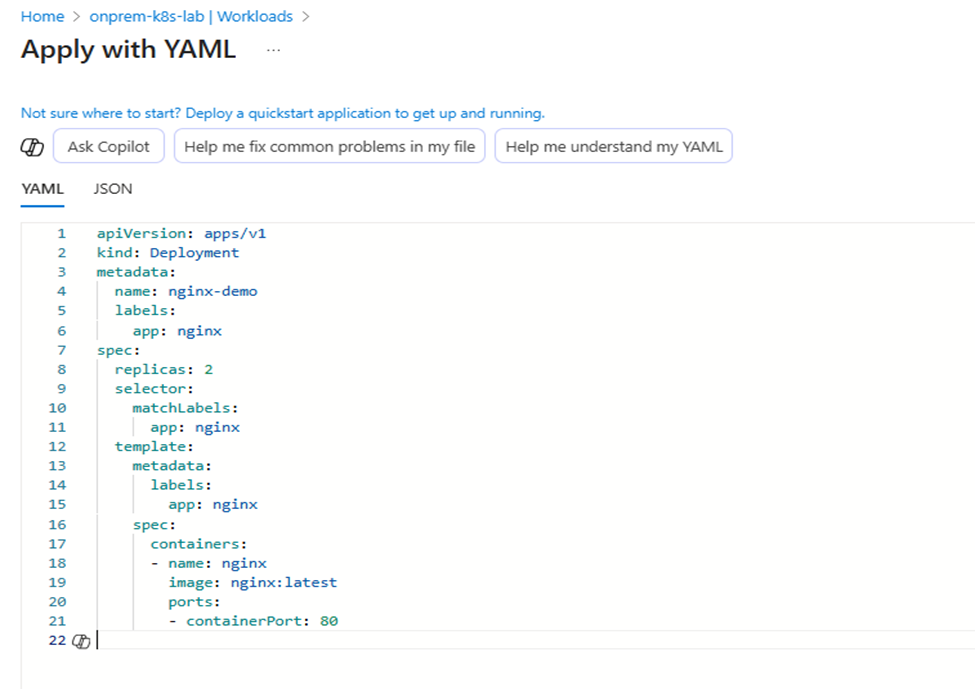

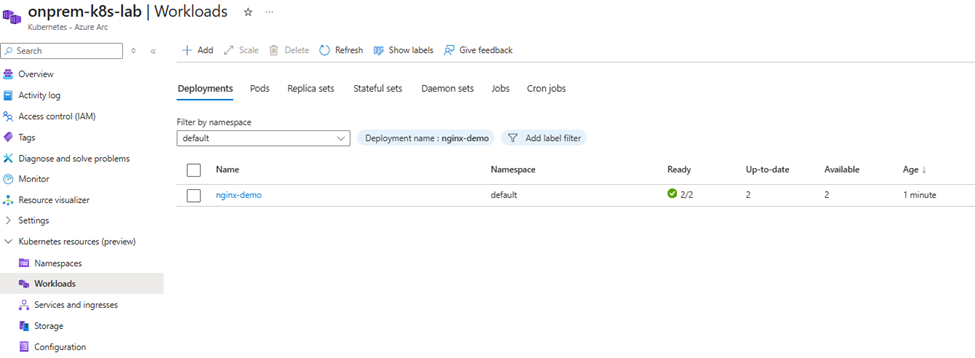

Step 6. Demo: View and Deploy Kubernetes Resources from Azure Portal

- Navigate to your Arc-enabled cluster in the portal.

- Choose Workloads > Add.

- Deploy a YAML manifest (e.g., an Nginx Deployment) directly from the portal:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-demo
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
```

Click Apply in the portal, and the resource will be deployed into your on-prem cluster—managed through Arc.

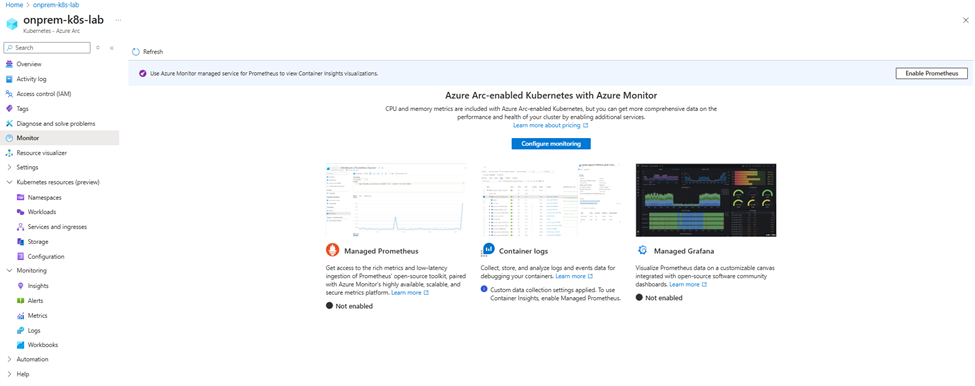

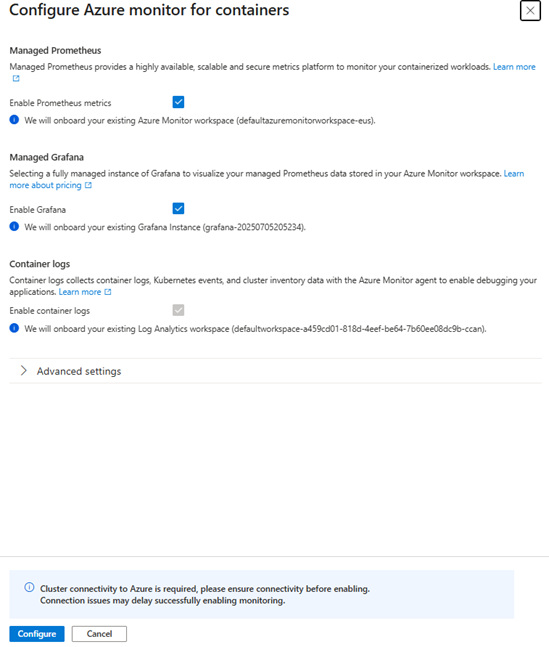

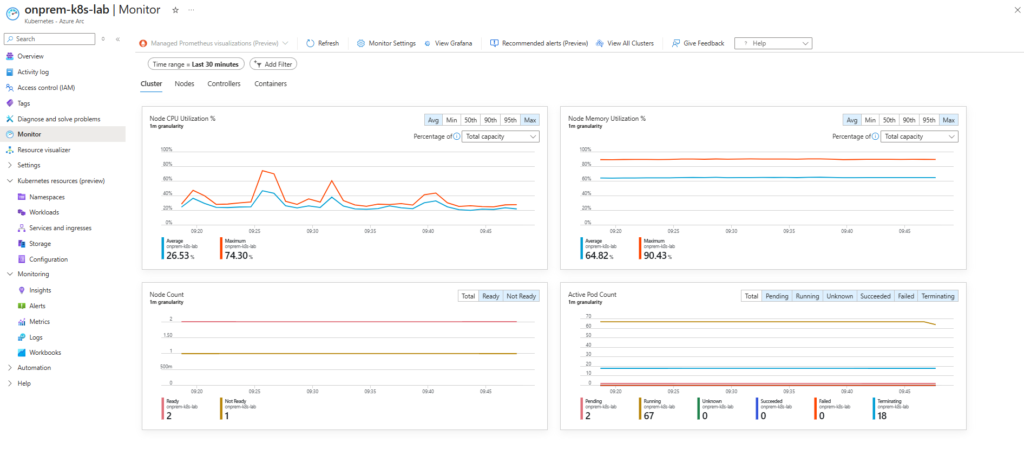

Step 7 — Enhance Your Arc-enabled Cluster with Azure Monitor

Once your on-premises Kubernetes cluster is connected to Azure Arc, the next logical step is to enable Azure Monitor for containers. This integration gives you deep visibility into the health and performance of your workloads — regardless of where the cluster is running.

With just a few clicks in the Azure Portal (or via ARM/CLI), you can:

- Collect metrics and logs from your Arc-enabled cluster in real time.

- Visualize performance through prebuilt dashboards for CPU, memory, and pod utilization.

- Set up alerts that proactively notify you of node failures, pod restarts, or resource saturation.

- Correlate telemetry across hybrid and multi-cloud clusters for a single-pane-of-glass monitoring experience.

This capability turns your onprem-k8s-lab cluster into a first-class citizen in Azure’s monitoring ecosystem. Instead of stitching together multiple open-source tools, you get enterprise-grade observability from the same Azure Monitor service that powers AKS, extended to on-prem clusters through Arc.

By combining Azure Arc with Azure Monitor, you not only unify management but also gain hybrid observability at scale, bridging the gap between cloud-native operations and enterprise infrastructure realities.

To enable it from the portal, open your Arc-enabled cluster and click Configure Monitoring > Configure.
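If you prefer the CLI path mentioned above, the sketch below wraps `az k8s-extension create` with the Azure Monitor containers extension type. It assumes the k8s-extension CLI extension is installed (`az extension add --name k8s-extension`). According to Microsoft's documentation, omitting workspace settings makes the CLI create or reuse a default Log Analytics workspace. Check the current Azure Monitor docs if you need to target a specific workspace.

```bash
#!/usr/bin/env bash
# Sketch: CLI alternative to the portal's "Configure monitoring" flow.
# Assumes: az extension add --name k8s-extension
# No workspace settings are passed, so a default Log Analytics workspace is used.

enable_container_insights() {
  local cluster="$1" rg="$2"
  az k8s-extension create \
    --name azuremonitor-containers \
    --cluster-name "$cluster" \
    --resource-group "$rg" \
    --cluster-type connectedClusters \
    --extension-type Microsoft.AzureMonitor.Containers
}

# Usage:
# enable_container_insights onprem-k8s-lab arc-rg
```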

🚀 Conclusion

By connecting your on-premises Kubernetes cluster to Azure Arc, you unlock a powerful hybrid control plane that brings cloud-native management, security, and observability to workloads running anywhere. In this lab, we simulated a real-world enterprise setup using a proxy, deployed the Arc agents, validated connectivity, and explored the cluster directly from the Azure Portal. With extensions like Azure Monitor and Azure Policy, your on-prem cluster becomes a true first-class Azure resource, enabling unified operations across datacenter, edge, and multi-cloud environments.

Azure Arc-enabled Kubernetes is more than just a bridge — it’s the foundation for hybrid cloud at scale.

“Why move every cluster to the cloud, when you can bring the cloud to every cluster?” 🌐✨